
Introduction

Security best practices call for consistent logging across resources and a process for centralizing and analyzing security event data. Logs from firewalls, on-premises infrastructure, and cloud services such as Amazon VPC and AWS CloudTrail are typically collected into Amazon S3, with AWS Lake Formation simplifying management of the resulting data lake. Even so, security-specific concerns such as data ownership, data normalization, and data enrichment remain difficult to implement. Amazon Security Lake addresses this by helping you analyze security data and gain a more complete view of your security posture across the entire organization. With Amazon Security Lake, you can create a purpose-built, customer-owned data lake that automatically centralizes security data from the cloud, on-premises, and custom sources. This in turn helps you protect your applications, workloads, and data.

In this blog, we discuss Amazon Security Lake, a service introduced in November 2022 that centralizes, manages, and optimizes large volumes of log and event data. It supports threat detection, investigation, and incident response, and lets you analyze security data using your preferred analytics tools.


Challenges for Security Teams

Customers want to protect their entire organization from future security events. To do so, they collect logs and event data from different sources, identify potential threats and vulnerabilities, assess security alerts, and respond accordingly. To extract security insights, the data first needs to be aggregated and normalized into a consistent form. This is time-consuming and costly, because customers use different security solutions for specific use cases, each with its own data store and format. Security teams face four challenges when analyzing organization-wide security data:

- Large Volumes of Security Data: Logs and event data are collected from on-premises infrastructure, cloud services, and custom sources, so a huge amount of data accumulates in a short span of time. Getting effective security insights from aggregated data sometimes requires storing it for a long duration, which pushes storage into gigabytes or even terabytes.

- Inconsistent and Incomplete Data: Because logs and event data come from different sources, each log type has its own format, which makes the data difficult to query. If logs from a source are not collected, you lose visibility into that source, so it is important to configure security solutions properly for your applications and workloads. In addition, some security solutions retain logs only for a fixed period, such as 30 days, which is a problem when data is needed for longer.

- Lack of Data Ownership: Another challenge is the lack of direct ownership of the data. Customers ingest security data into analytics solutions to get insights, which leaves the data locked inside those solutions and isolated from the wider security ecosystem. Many innovations in the security industry depend on customers owning their data directly.

- More Data Wrangling, Less Analysis: Tracking infrastructure changes, generating alarms, monitoring performance, and regularly normalizing data all require significant staff time. This is hard to achieve within a fixed budget, so teams end up wrangling data instead of analyzing it.

Amazon Security Lake is designed to address these challenges by automating the collection and preparation of security data across your entire organization.

Amazon Security Lake

Amazon Security Lake automatically centralizes security data into a purpose-built data lake in your account. It aggregates, normalizes, and manages security data from across your entire organization, so that you can analyze it using your preferred analytics solutions.

Features of Amazon Security Lake

Data Aggregation: Amazon Security Lake creates a purpose-built security data lake in your account, collects logs and event data from cloud, on-premises, and custom sources, and stores them in Amazon S3 buckets so that you retain control and ownership of your security data.

Data Normalization and Support for OCSF: Security Lake has adopted an open standard, the Open Cybersecurity Schema Framework (OCSF), to normalize and combine security data from AWS and a range of enterprise security data sources. It can aggregate and normalize Amazon VPC Flow Logs, AWS CloudTrail management events, Amazon Route 53 Resolver query logs, and security findings from solutions integrated through AWS Security Hub, as well as custom data and data from third-party security solutions, into the OCSF format. Because the data follows a common schema, Security Lake can make it available to your preferred analytics tool.
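To make the idea of normalization concrete, here is a minimal Python sketch. It is not how Security Lake is implemented. It maps two differently shaped records, a VPC Flow Logs line in the default format and a simplified DNS query log, onto one common shape whose attribute names are modeled on OCSF. The function names, the reduced attribute set, and the input dictionary layout are our own assumptions; a real OCSF event carries many more attributes.

```python
from datetime import datetime

# Field order of the default (version 2) VPC Flow Logs record format.
VPC_FLOW_FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]


def iso_to_epoch_ms(timestamp):
    """Convert an ISO 8601 UTC timestamp such as 2023-01-01T00:00:00Z to epoch ms."""
    parsed = datetime.fromisoformat(timestamp.replace("Z", "+00:00"))
    return int(parsed.timestamp() * 1000)


def normalize_vpc_flow(line):
    """Map one space-separated flow log line to a simplified, OCSF-like event."""
    raw = dict(zip(VPC_FLOW_FIELDS, line.split()))
    return {
        "class_name": "Network Activity",
        "time": int(raw["start"]) * 1000,  # flow logs report epoch seconds
        "cloud": {"account": {"uid": raw["account_id"]}},
        "src_endpoint": {"ip": raw["srcaddr"], "port": int(raw["srcport"])},
        "dst_endpoint": {"ip": raw["dstaddr"], "port": int(raw["dstport"])},
        "unmapped": {"action": raw["action"]},
    }


def normalize_dns_query(record):
    """Map a simplified DNS query log dict (assumed layout) to the same shape."""
    return {
        "class_name": "DNS Activity",
        "time": iso_to_epoch_ms(record["query_timestamp"]),
        "cloud": {"account": {"uid": record["account_id"]}},
        "src_endpoint": {"ip": record["srcaddr"], "port": int(record["srcport"])},
        "query": {"hostname": record["query_name"], "type": record["query_type"]},
    }
```

Once both sources share the same `time`, `cloud`, and `src_endpoint` attributes, a single query can sort or filter events from either source without knowing where they came from.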

Multi-Account and Multi-Region Support: Amazon Security Lake can be enabled across multiple accounts and in every Region where the service is available. Security data across accounts can be aggregated on a per-Region basis or consolidated from multiple Regions into rollup Regions, for example to meet compliance requirements.
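The difference between per-Region aggregation and rollup Regions can be pictured with a small Python sketch. The function `plan_rollup` and its inputs are hypothetical names of our own, not part of any AWS API; the sketch only works out which Regions feed into which destination, under the assumption that a Region without a rollup target aggregates its own data.

```python
from collections import defaultdict


def plan_rollup(contributing_to_rollup, enabled_regions):
    """Return {destination Region: [Regions whose data is consolidated there]}.

    contributing_to_rollup maps a contributing Region to its rollup Region.
    Regions that are not listed are treated as aggregating their own data.
    """
    plan = defaultdict(list)
    for region in sorted(enabled_regions):
        destination = contributing_to_rollup.get(region, region)
        plan[destination].append(region)
    return dict(plan)
```

For instance, mapping `eu-west-1` to `eu-central-1` while leaving `us-east-1` unmapped yields one European destination holding data from both European Regions, and `us-east-1` holding only its own.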

Data Lifecycle Management and Optimization: Amazon Security Lake manages the lifecycle of security data through configurable retention periods, and controls storage costs through automated storage tiering. It also automatically partitions security data and converts it to Apache Parquet, a format that is efficient for both storage and querying.
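As a rough illustration of what retention, tiering, and partitioning mean in practice, consider the Python sketch below. Both functions are hypothetical helpers of our own. The day thresholds, the rule layout, and the partition key names are assumptions chosen for illustration; the storage class names are standard Amazon S3 identifiers.

```python
from datetime import date


def storage_class_for(age_days, transitions, expiration_days=None):
    """Return the storage class for an object of a given age, or None if expired.

    transitions is a list of {"days": int, "storageClass": str} rules; the rule
    with the largest threshold not exceeding the object's age applies.
    """
    if expiration_days is not None and age_days >= expiration_days:
        return None  # past the retention period, the object is removed
    current = "STANDARD"
    for rule in sorted(transitions, key=lambda r: r["days"]):
        if age_days >= rule["days"]:
            current = rule["storageClass"]
    return current


def partition_prefix(source, region, account_id, event_day):
    """Build a Hive-style partition prefix (key names are illustrative)."""
    return (
        f"{source}/region={region}/accountId={account_id}/"
        f"eventDay={event_day:%Y%m%d}/"
    )
```

Partitioning by Region, account, and day means a query for one account on one day only has to read the Parquet files under a single prefix, which is where much of the query efficiency comes from.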

Configure Amazon Security Lake for Security Data collection

Prerequisite to configure Amazon Security Lake

- To get started with Amazon Security Lake, first designate a delegated administrator account for it from the management account of your organization in AWS Organizations. The delegated administrator account is then used to enable Amazon Security Lake, which aggregates security data across multiple accounts and multiple Regions. You can also enable Amazon Security Lake for a standalone AWS account.

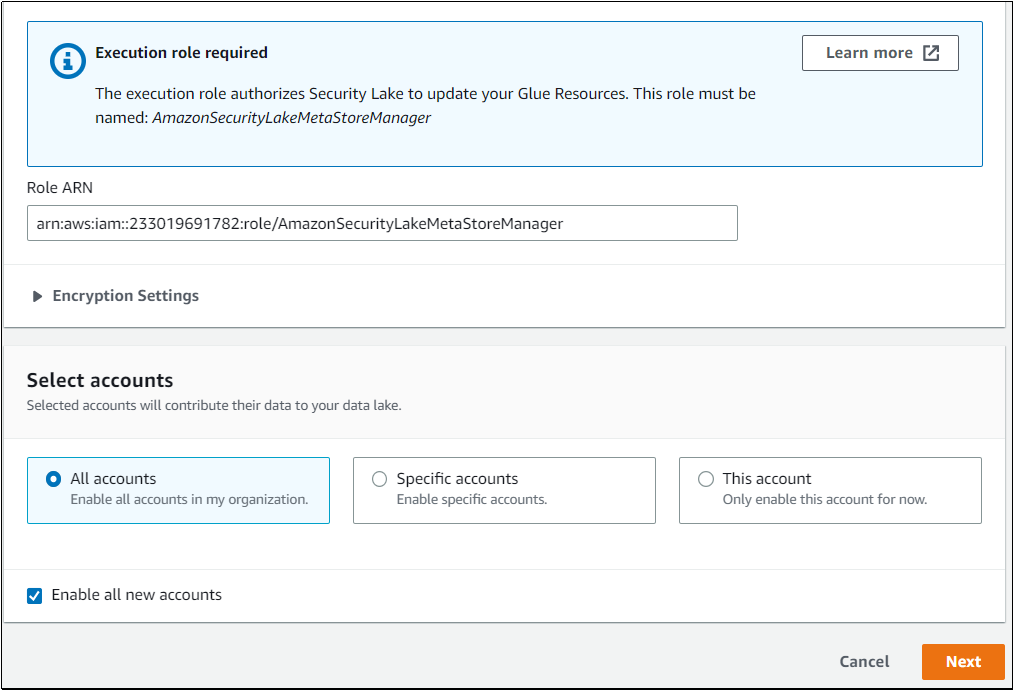

- To allow Amazon Security Lake to run ETL (extract, transform, and load) jobs on logs and event data from various sources, create a role named AmazonSecurityLakeMetaStoreManager. This role is required to create the data lake and to query its data.

Once the account is delegated and the role is created, you can configure Security Lake in your account to aggregate, normalize, and manage security data from various data sources.

Step 1: Define the collection objective. To enable Amazon Security Lake, first select the data sources, Regions, and accounts, and specify the ARN of the role created in the prerequisites.

Step 2: Define the target objective. In this step, define the rollup Region and, if required, set storage classes, so that data ingested from multiple Regions and accounts in your organization is consolidated and stored as intended.

Step 3: Under the Sources option, enable data sources such as CloudTrail, VPC Flow Logs, Route 53, and Security Hub findings in all Regions or in specific Regions.

Step 4: Review the Regions in which buckets were created, and open the buckets to see the logs stored in Apache Parquet format.
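The console steps above can also be expressed as data. The Python sketch below builds plain dictionaries describing the same choices: Regions, rollup Region, retention, storage tiering, role ARN, and log sources. The function names are hypothetical. The dictionary keys follow our understanding of the `create_data_lake` and `create_aws_log_source` operations of the boto3 `securitylake` client, so treat them as assumptions and check them against the current API reference before use. The sketch itself makes no AWS calls.

```python
def build_data_lake_request(role_arn, regions, rollup_region=None,
                            retention_days=None, transitions=None,
                            replication_role_arn=None):
    """Build a request body describing Steps 1 and 2 (assumed key names)."""
    configurations = []
    for region in regions:
        config = {"region": region}
        lifecycle = {}
        if retention_days is not None:
            lifecycle["expiration"] = {"days": retention_days}
        if transitions:
            lifecycle["transitions"] = list(transitions)
        if lifecycle:
            config["lifecycleConfiguration"] = lifecycle
        # Contributing Regions point at the rollup Region; the rollup Region
        # itself needs no replication entry.
        if rollup_region and region != rollup_region:
            replication = {"regions": [rollup_region]}
            if replication_role_arn:
                replication["roleArn"] = replication_role_arn
            config["replicationConfiguration"] = replication
        configurations.append(config)
    return {
        "configurations": configurations,
        "metaStoreManagerRoleArn": role_arn,
    }


def build_log_source_request(source_names, regions, accounts=None):
    """Build a request body describing Step 3 (assumed key names)."""
    sources = []
    for name in source_names:
        source = {"sourceName": name, "regions": list(regions)}
        if accounts:
            source["accounts"] = list(accounts)
        sources.append(source)
    return {"sources": sources}
```

If the key names match the API, the results could be passed along the lines of `client.create_data_lake(**request)` and `client.create_aws_log_source(**request)`, where `client` is a boto3 `securitylake` client created in the delegated administrator account. Keeping the request construction separate from the call makes the configuration easy to review and test before anything is created.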

Conclusion

Amazon Security Lake is a fully managed security data lake service that automatically centralizes, normalizes, and manages security data from AWS and third-party sources in a data lake stored in your AWS account, ready for analysis. It can be enabled in a few clicks to aggregate logs and event data from the cloud, on-premises, and custom sources.



WRITTEN BY Rashmi D

Rashmi Dhumal works as a Subject Matter Expert on the AWS team at CloudThat, India. A passionate trainer, she is aptly described as a “technofreak and a quick learner”. She has 20+ years of experience as a technical trainer, academician, and mentor, with active involvement in curriculum development, and has trained many professionals and graduate students across India.


September 5, 2023
