
Introduction

Docker is an open-source platform for building, deploying, and managing containerized applications, and it includes a container runtime. Many people think Docker was the first tool of its kind, but it was not. Container-style isolation on Unix goes back to the chroot system call introduced in 1979, and Linux containers (LXC) predate Docker. Docker matters to both the developer and container communities because it made containers easy enough to use that they became mainstream.

What is a Container?

A container can be thought of as a server-side application that is no longer tied to specific hardware and instead becomes a self-contained piece of software. Container tools fall into three categories:

- Builder: builds container images, for example Docker building an image from a Dockerfile

- Engine: runs containers, for example the docker command

- Orchestration: manages multiple containers, for example OKD and Kubernetes

Containerization is about packaging an application and everything it needs to run into a container image. This image can run in an isolated space (a container) on different systems. It is important to remember that containers on the same host share the host operating system's kernel. Many IT leaders are interested in this technology because it is often used to deploy and run distributed applications without having to use a Virtual Machine (VM).


What is a Dockerfile?

A Dockerfile is a text document containing the commands a user could otherwise call on the command line to assemble an image. In other words, it is the set of instructions for building an image of the application. The whole build is described in one file, which makes it easier to keep images up to date and to review them from a security perspective.
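The Dockerfile below is a minimal sketch that packages a hypothetical Python script named app.py. The file name and the python:3.12-slim base image are assumptions chosen for illustration. Each instruction is one step of the image build.

```dockerfile
# Start from an official base image with a specific tag.
FROM python:3.12-slim

# Set the working directory inside the image.
WORKDIR /app

# Copy the (hypothetical) application file from the build context.
COPY app.py .

# Default command that runs when a container starts from this image.
CMD ["python", "app.py"]
```

To build the image, run docker build -t my-app . in the directory containing this Dockerfile. Then run docker run my-app to start a container from it. The name my-app is just an example tag.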

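BuildKit, Docker's improved build backend, decreases build time and increases productivity and consistency.

Note: Use Docker BuildKit as the default builder. It is already the default in Docker Desktop and in Docker Engine 23.0 and later. On older Docker Engine versions, enable it by setting the environment variable DOCKER_BUILDKIT=1.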


Best Practices for Creating a Dockerfile

- Build time (Incremental)

- Image Size

- Reproducibility/Maintainability

- Security

- Consistency/Repeatability

- Concurrency

Let’s learn about the best practices in detail.

1. Incremental build time:

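- Order matters for caching. Order instructions from least to most frequently changing content, because a change in one step invalidates the cache for every step after it.

- Use more specific COPY instructions to limit cache busts. Only copy what’s needed, and avoid “COPY .” if possible.

- Keep apt-get update and apt-get install together as “line buddies” in the same RUN instruction. This prevents using an outdated, cached package index.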
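The three points above can be combined in a single Dockerfile. This is a sketch for a hypothetical Python app. The requirements.txt file, the src/ directory, and the curl package are placeholders, not part of the original example.

```dockerfile
FROM python:3.12-slim

# Changes rarely: OS packages. "apt-get update" and "apt-get install" share
# one RUN, so editing the package list always refreshes the package index
# instead of reusing an older cached one.
RUN apt-get update && apt-get install -y curl

WORKDIR /app

# Changes sometimes: only the dependency manifest is copied here, so editing
# source code does not invalidate the cached "pip install" layer below.
COPY requirements.txt .
RUN pip install -r requirements.txt

# Changes most often: the source code, copied last and limited to the
# directory the app needs instead of "COPY . .".
COPY src/ ./src/

CMD ["python", "src/app.py"]
```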

2. Reduce image size:

- Remove unnecessary dependencies. A smaller image deploys faster and has a smaller attack surface.

- Remove the package manager cache. The cache is not needed after the packages are installed, so delete it in the same RUN instruction that created it.
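Here is a minimal sketch of both points on a Debian-based image. It assumes the application only needs ca-certificates and curl.

- The --no-install-recommends flag skips optional packages.
- The apt package lists are deleted in the same RUN instruction.

Deleting them in a later RUN would not shrink the image, because the earlier layer would still contain them.

```dockerfile
FROM debian:bookworm-slim

# Install only what is needed (no "recommended" extras) and remove the
# apt package lists in the same layer, so they never end up in the image.
RUN apt-get update \
    && apt-get install -y --no-install-recommends ca-certificates curl \
    && rm -rf /var/lib/apt/lists/*
```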

Use official images where possible

- Reduce time spent on maintenance (frequently updated with fixes)

- Reduce size (shared layers between images)

- Pre-configured for container use

- Built by smart people

- Use more specific tags. The “latest” tag is a rolling tag. Pin a specific version to prevent unexpected changes in your base image.

- Look for minimal flavors. In one example, just switching to a different base image reduced the image size by 540 MB.
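The example below uses the official python image to contrast a rolling tag with a specific, minimal tag. The version shown is an assumption, so pick the one your application targets.

```dockerfile
# Avoid: "latest" is a rolling tag, so two builds of the same Dockerfile
# can start from different base images.
# FROM python:latest

# Prefer: an official image with a specific version and a minimal flavor.
# Many official images also offer other minimal variants, such as "-alpine".
FROM python:3.12-slim
```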

3. Reproducibility:

Build from source in a consistent environment

Make the Dockerfile your blueprint:

- It describes the build environment, including the correct versions of the build tools to install

- It prevents inconsistencies between environments, for example in system dependencies

- The “source of truth” is the source code, not the build artifact

Use multi-stage builds to remove build dependencies
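This sketch shows building from source in a consistent environment, using a multi-stage Dockerfile for a hypothetical Go program. It assumes a single-package Go module with go.mod, go.sum, and .go files in the build context. The build stage pins the toolchain version, and only the compiled binary reaches the final image.

```dockerfile
# Build stage: a pinned Go toolchain describes the build environment.
FROM golang:1.22 AS build
WORKDIR /src

# Download modules first so this layer stays cached while code changes.
COPY go.mod go.sum ./
RUN go mod download

# Compile a static binary from source inside the container.
COPY *.go ./
RUN CGO_ENABLED=0 go build -o /bin/app .

# Runtime stage: start from an empty image and copy only the binary.
# The compiler, sources, and module cache stay behind in the build stage.
FROM scratch
COPY --from=build /bin/app /app
ENTRYPOINT ["/app"]
```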

Multi-stage use cases

- Separate build from runtime environment (shrinking image size)

- Slight variations on images (DRY): build/dev/test/lint/… specific environments

- Delinearizing your dependencies (concurrency)

- Platform-specific stages
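The “slight variations (DRY)” use case can be sketched as one Dockerfile with a shared base stage and small lint, test, and production variants. Several details are assumptions for illustration:

- the stage names
- the requirements.txt, src/, and tests/ paths
- the choice of flake8 and pytest

```dockerfile
# Shared base: dependencies and source are set up once for every variant.
FROM python:3.12-slim AS base
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY src/ ./src/

# Lint variant: adds a linter on top of the shared base.
FROM base AS lint
RUN pip install --no-cache-dir flake8
RUN flake8 src/

# Test variant: adds a test runner and the test files.
FROM base AS test
RUN pip install --no-cache-dir pytest
COPY tests/ ./tests/
RUN pytest tests/

# Production variant: nothing extra, only the default command.
FROM base AS prod
CMD ["python", "src/app.py"]
```

A specific variant is selected with the --target flag, for example --target test. Without it, the last stage, prod, is built.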

From linear Dockerfile stages

- All stages are executed in sequence

- Without BuildKit, unneeded stages are still executed, and their results are then discarded

To multi-stage graphs with BuildKit

- BuildKit traverses the graph from the bottom (the stage named with --target) to the top

- Unnecessary stages are not even considered
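The difference shows up in a small stage graph; the stage names and commands in this sketch are placeholders. Building with --target test needs only base, deps, and test.

- The legacy builder also runs docs, because it executes every stage that appears before the target.
- BuildKit walks back from test and never considers docs.

```dockerfile
FROM alpine:3.19 AS base
RUN echo "base" > /base.txt

# Not needed by "test": the legacy builder still runs it (it appears before
# the target), but BuildKit skips it.
FROM base AS docs
RUN echo "docs" > /docs.txt

FROM base AS deps
RUN echo "deps" > /deps.txt

# The --target stage: BuildKit resolves only deps and base from here.
FROM deps AS test
RUN echo "tests" > /test.txt
```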

4. Concurrency:

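- Multi-stage: independent stages can be built concurrently

- Use BuildKit whenever possible

- BuildKit gives access to new features as soon as they are released

- Always keep your secret access key and access key ID hidden, and never bake them into an image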
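This sketch illustrates the first point. Its two build stages do not depend on each other, so BuildKit can run them concurrently, and only the final stage waits for both. All of the following are hypothetical:

- the Node.js frontend in web/
- its npm build script, which writes to web/dist
- the Go backend, a single-package module with go.mod and go.sum

```dockerfile
# Hypothetical frontend stage; independent of the backend stage.
FROM node:20-slim AS frontend
WORKDIR /web
COPY web/package.json web/package-lock.json ./
RUN npm ci
COPY web/ ./
RUN npm run build

# Hypothetical Go backend stage; independent of the frontend stage.
FROM golang:1.22 AS backend
WORKDIR /src
COPY go.mod go.sum ./
RUN go mod download
COPY *.go ./
RUN CGO_ENABLED=0 go build -o /bin/server .

# Final stage: depends on both, so it starts once both have finished.
FROM scratch
COPY --from=backend /bin/server /server
COPY --from=frontend /web/dist /static
ENTRYPOINT ["/server"]
```

The legacy builder would execute the same stages one after another.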

DOs and DON'Ts

Don’t bake credentials into an image. Always check your image’s docker history for any credentials.
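This sketch shows the “don’t” and “do” for credentials, using a BuildKit secret mount. The secret id aws, the bucket name example-bucket, and the use of the AWS CLI are assumptions for illustration. The first line is a parser directive that selects the Dockerfile 1.x syntax.

```dockerfile
# syntax=docker/dockerfile:1
FROM python:3.12-slim
RUN pip install --no-cache-dir awscli

# DON'T: build-arg and ENV values can be read back from the image, for
# example with docker history or docker inspect.
# ARG AWS_SECRET_ACCESS_KEY
# ENV AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY

# DO: mount the credentials file only while this RUN step executes; it is
# not written to any image layer.
RUN --mount=type=secret,id=aws,target=/root/.aws/credentials \
    aws s3 cp s3://example-bucket/data.tar.gz /data.tar.gz
```

The secret is provided at build time, for example with docker build --secret id=aws,src=$HOME/.aws/credentials .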

- Private git repos

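For private git repositories, this sketch uses an SSH mount instead of copying a private key into the image. The repository URL is hypothetical. ssh-keyscan is used here only to populate known_hosts for the example.

```dockerfile
# syntax=docker/dockerfile:1
FROM alpine:3.19
RUN apk add --no-cache git openssh-client

# DON'T: the key is stored in an image layer, and deleting it in a later
# step does not remove it from that layer.
# COPY id_rsa /root/.ssh/id_rsa

# Trust the git host's public key so the clone is non-interactive.
RUN mkdir -p -m 0700 ~/.ssh && ssh-keyscan github.com >> ~/.ssh/known_hosts

# DO: forward the host's SSH agent to this RUN step only.
RUN --mount=type=ssh git clone git@github.com:example-org/private-repo.git /src
```

The agent is forwarded at build time with docker build --ssh default .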

A Digest of Best Practices

We went from:

- Inconsistent build/dev/test environments

- Bloated image

- Slow build and incremental build times (cache busts)

- Building insecurely

To:

- Minimal image

- Consistent build/dev/test environments

- Very fast build and incremental build times

- Building more securely

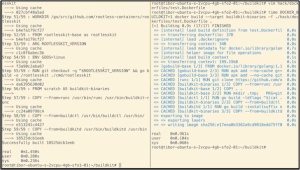

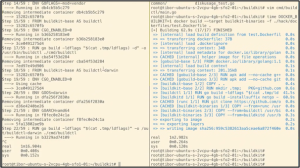

Example for BuildKit:

- Two Dockerfiles are created. One is built with BuildKit enabled and the other with BuildKit disabled.

- When the builds are run, we can see the time difference between them. The BuildKit-enabled build finishes faster than the other one.

- Next, the code is changed in both files and the images are built again.

- We can see that the BuildKit build has already completed, whereas the other one is still building.

- Once both builds have completed, we can see the time difference between them: build 1 took more time.

Conclusion

Docker has a huge community. It will continue to thrive in the cloud, bringing many benefits to organizations on their cloud journey. Docker is an essential tool for every developer who embraces DevOps best practices. Since Docker is widely used to build containers, it is important to know how to write a good Dockerfile. I hope this blog helped you understand the best practices for creating a Dockerfile.


About CloudThat

CloudThat is also the official AWS (Amazon Web Services) Advanced Consulting Partner and Training Partner and a Microsoft Gold Partner. It helps people develop knowledge of the cloud and helps their businesses aim for higher goals using best-in-industry cloud computing practices and expertise. We are on a mission to build a robust cloud computing ecosystem by disseminating knowledge on technological intricacies within the cloud space. Our blogs, webinars, case studies, and white papers enable all the stakeholders in the cloud computing sphere.

Drop a query if you have any questions regarding Containerization, Docker, and DevOps, and I will get back to you quickly.


FAQs

1. Does Docker run on Linux, macOS, and Windows?

ANS: – Yes. Docker runs on Linux on x86-64, ARM, and other CPU architectures, and on Windows on x86-64. On macOS, Docker Desktop runs containers inside a lightweight Linux virtual machine.

2. Do we lose data when a container exits?

ANS: – No. Data written inside a container is preserved when the container exits, until the container is explicitly deleted. To keep data beyond the life of a container, store it in a volume.

3. How do we connect Docker containers?

ANS: – Docker containers are connected using Docker networks. Containers attached to the same user-defined network can reach each other by container name.

WRITTEN BY Swapnil Kumbar

Swapnil Kumbar is a Research Associate - DevOps. He knows various cloud platforms and has working experience with AWS, GCP, and Azure. He is enthusiastic about leading technologies in cloud and automation. He is also passionate about tailoring existing architecture.


September 29, 2022

